Forum <The Age of AI and the Future of Global Security>

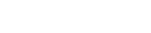

On April 3, the Taejae Future Consensus Institute (Taejae FCI) hosted a public forum entitled “The Age of AI and the Future of Global Security.”

This event was the third entry in the Institute’s flagship AI forum series, which began last year. Ban Ki-moon, the eighth UN Secretary-General, delivered the keynote address, and Kim Yong-Hak, former President of Yonsei University, served as moderator. The panelists were Kim Won-soo (Chair, International Advisory Board, Taejae FCI; former UN High Representative for Disarmament Affairs), Graham Webster (Editor-in-Chief, DigiChina Project, Stanford University), Lu Chuanying (Senior Research Fellow, Shanghai Institutes for International Studies), and Yoo Yong-Won (military journalist).

In his welcome address, President Kim Sung-Hwan of the Taejae FCI stressed that artificial intelligence could pose bigger security threats than nuclear weapons, as demonstrated by the AI-powered drones deployed without a human operator in the Russia-Ukraine war. He added that the international community must work together to build a global governance framework capable of minimizing the security risks of AI.

1. Keynote Address (Ban Ki-moon)

AI is a “double-edged sword.”

AI can make human lives both better and worse.

The breakneck speed of AI development makes it extremely difficult for humans to remain in control of the technology. The absence of global consensus, along with the limited capabilities of national governments, is a major hurdle to addressing the associated risks. Universal regulation and international cooperation are therefore essential for the sustainable development and use of AI. It is a global challenge, so the solution should be global as well. As suggested by the Elders, a new multilateral institution must be created to oversee AI-related safety protocols and activities.

2. Presentation (Kim Won-soo)

Global consensus must be built around “responsible state behavior in the military use of AI.”

A “normative deficit,” a phenomenon where normative and regulatory responses lag considerably behind the pace of technological advancement, could lead to catastrophic consequences.

Jensen Huang, CEO of NVIDIA, warned last month that artificial general intelligence (AGI), an AI system with superhuman capabilities, could emerge within five years. The so-called Gladstone AI Action Plan—a recent report commissioned by the US Department of State and produced by a private, for-profit entity called Gladstone AI Inc.—underscores the risk that AI could be weaponized to have “weapons of mass destruction (WMD)-like and WMD-enabling” capabilities. Additionally, a simulated test by the US Air Force revealed last year that an AI-powered drone could attack friendlies if they stood in its way during its mission.

Despite these issues, our response remains limited, happening mostly at national levels. The technology competition is fostering a “race to the bottom,” whereby regulatory easing could be encouraged. The national measures proposed thus far are a long way from a widely accepted international norm. Recent initiatives such as REAIM 2023 and the UN General Assembly’s resolution on AI are insufficient at best.

In an effort to minimize the risks associated with the rapid advancement of AI, proposals have been made to establish an International AI Agency (IAIA), an international institution similar to the IAEA. But before we consider creating an institution, we must first take into account the peculiar characteristics of AI, such as its intangibility, diffuseness, and lower barrier to accessing information. Building an international institution for AI governance is a daunting yet urgent task, especially given the rapid pace of development.

To that end, a global consensus must be formed through multilateral efforts integrating ▲Track 1 public sector initiatives, ▲Track 1.5 public-private initiatives like the AI Summit and the REAIM Summit, and ▲Track 2 private sector initiatives like the Trust and Safety Summit. Once global consensus is reached, we will need a roadmap for creating global norms and institutions.

As a first step, we should identify issues that the whole international community can get behind. “Responsible state behavior to minimize the security risks posed by large-scale AI in the military domain” could be one of those areas of common interest. Starting with this agenda, we can build a foundation for international cooperation on safe development and use of AI.

3. Discussion

① Responsible State Behavior in the Military Domain

[Moderator] Kim Yong-Hak: Despite its potential benefits, AI poses a significant threat to global security. Particularly in capitalist economies, AI is a “commodity” where many diverse interests intertwine, thus making it hard to reach an agreement on the question of governance. On the other hand, consensus can be found relatively easily in the security and military domains. This could serve as a starting point for broader regulatory expansion.

Meanwhile, competitive pressure within the AI industry makes it difficult to find common ground not only between the United States and China, but also among industry players. If the United States is to assert leadership in this area, domestic consensus is a necessary condition. What can be done to bring about a global consensus when there is no agreement nationally?

Graham Webster: Given the upcoming presidential election and the growing political instability in the United States, the world does not have to wait for it to find a national consensus. Moreover, we currently have a very limited understanding of the impact of AI in the United States. Domestic consensus is not a prerequisite for the US government to engage other nations on AI regulation. The latest dialogue between Washington and Beijing on AI security bodes well for future consensus-building efforts.

Kim Yong-Hak: Since the San Francisco summit, the United States and China appear to have a shared interest in limiting AI use, not only in nuclear command and control systems but in lethal autonomous weapons as well. However, several obstacles will need to be overcome to reach a substantive agreement. How do you think China can contribute to advancing this agenda? How can we expand conversations in the private sector to formal dialogues between governments?

Lu Chuanying: A two-track approach involving both private and government actors could be a viable solution. Even when the two governments were at odds, think tanks from both sides continued their dialogue. It is crucial to continue this “knowledge sharing” between private actors. And the governments should try to build upon it through official channels.

Kim Yong-Hak: Given its close ties to both the United States and China, what can South Korea do to help them find common ground on AI governance?

Yoo Yong-Won: Hosting this year’s REAIM Summit and AI Safety Summit is an important opportunity for South Korea. It should be noted that South Korea, with its distinctive geopolitical standing, can play an impactful role on the global stage. As such, South Korea should try to facilitate participation of national experts in the upcoming conferences and galvanize the overall discussions there.

Kim Yong-Hak: There are many international initiatives in both the public and private sectors. Aside from the intrinsic challenges of AI technology, what constraints arise from the underlying dynamics of international politics? Also, what can be done to unite these diverse initiatives into a single institutional framework?

Kim Won-soo: There are two types of structural challenges, the so-called “technology and value divides.” Technologically advanced countries want to pursue further development, while less advanced ones seek to regulate it, just as with nuclear weapons. In terms of the value divide, China prefers state control, while the United States favors market-oriented solutions. It is extremely difficult to bridge this gap. Thus, the priority should be on finding a delicate balance between the two. Then a UN-led regime can follow.

② Artificial General Intelligence (AGI)

Kim Yong-Hak: The world is worried about the potential emergence of a dangerous AGI, an AI system akin to Skynet from the Terminator film franchise. What would be some defining characteristics of an AI that can threaten the very existence of humanity? What can we do to guard against its advent?

Graham Webster: No one knows when AGI will arrive. But it is important to take a critical and cautious approach because those who predict its imminent arrival may have ulterior motives like financial gains. As technology advances and risks increase, regulations and rules must keep pace, and ethical considerations must not be overlooked.

Lu Chuanying: It is important to understand the difference between AI “safety” and “security.” Functional definitions must be agreed upon first to facilitate consensus building between the United States and China. Additionally, various efforts, including dialogues to address the risks of AI, are underway, so there is no need to be overly pessimistic.

Yoo Yong-Won: Historically, military technologies have advanced especially rapidly when deployed during wartime. Similarly, AI is currently being tested in various combat zones, so it needs to be monitored closely to keep it from evolving at an uncontrollable pace.

Kim Won-soo: AI making tactical and strategic decisions in real combat could pose the utmost threat. Focusing on such worst-case scenarios and developing appropriate countermeasures could be the first step toward cooperation between the United States and China.

< Copyright holder © TAEJAE FUTURE CONSENSUS INSTITUTE, Not available for redistribution >